All of MongoDB Operator documentation


Documentation

- 1: Overview

- 1.1: MongoDB Overview

- 1.2: MongoDB Setup

- 2: Getting Started

- 2.1: Installation

- 2.2: Standalone Setup

- 2.3: Replication Setup

- 2.4: Failover Testing

- 3: Configuration

- 3.1: MongoDB Standalone

- 3.2: MongoDB Replicated Cluster

- 4: Monitoring

- 4.1: Prometheus Monitoring

- 4.2: Grafana Dashboard

- 5: Development

- 6: Release History

- 6.1: CHANGELOG

1 - Overview

MongoDB Operator is a Kubernetes operator written in Golang. It creates, updates, and manages MongoDB standalone, replicated, and arbiter-replicated setups on Kubernetes and OpenShift clusters, and sets up MongoDB following the required best practices.

Architecture

The architecture of the MongoDB operator looks like this:

Purpose

The purpose of this MongoDB operator is to provide an easy and extensible way to deploy a production-grade MongoDB setup on Kubernetes. It helps in designing different types of MongoDB setups, such as standalone and replicated, with security and monitoring best practices.

Supported Features

- MongoDB replicated cluster setup

- MongoDB standalone setup

- MongoDB replicated cluster failover and recovery

- Monitoring support with MongoDB Exporter

- Password based authentication for MongoDB

- Kubernetes resources for MongoDB standalone and cluster setups

1.1 - MongoDB Overview

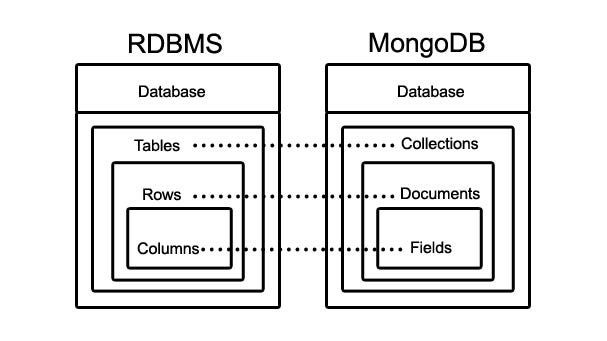

MongoDB is an open-source, cross-platform, document-oriented NoSQL database that stores data in the form of documents and collections. A document is a record that contains all the information about itself, and a group of documents is called a collection.

A document contains a number of fields that describe the record. Documents can differ in size, because two documents can store a different number of fields. Every field maps a key to a value, and the value can be of any data type, such as text or a number. A value can also be an array, an embedded document, or a reference to another document. MongoDB stores documents as BSON (a binary encoding of JSON) so that it can support additional data types, such as dates, that JSON does not have.
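The following Python sketch makes the JSON limitation concrete. It uses only the standard library, with no MongoDB driver, and models a document as a dict. Plain JSON has no date type, so the standard `json` encoder rejects the document, whereas BSON has a native date type. The field values and the `json_can_encode` helper are illustrative.

```python
import json
from datetime import datetime, timezone

# Illustrative document: a set of key-value pairs. The "skills", "profile"
# and "created" fields are hypothetical, added to show different value types.
user = {
    "name": "Abhishek Dubey",
    "age": 24,
    "skills": ["kubernetes", "mongodb"],                  # array value
    "profile": {"team": "devops"},                        # embedded document
    "created": datetime(2022, 2, 1, tzinfo=timezone.utc),  # date value
}


def json_can_encode(doc: dict) -> bool:
    """Return True if the standard json module can serialize the document as-is."""
    try:
        json.dumps(doc)
        return True
    except TypeError:
        # json.dumps raises TypeError for types that JSON cannot represent.
        return False


print(json_can_encode(user))  # False: the datetime value has no JSON type
without_date = {k: v for k, v in user.items() if k != "created"}
print(json_can_encode(without_date))  # True
```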

Features

- Scalability: MongoDB is well suited to big-data workloads. It can hold large volumes of data and scale horizontally across servers.

- Flexibility: MongoDB is schema-less. It does not enforce a fixed schema, so documents in the same collection can have different fields, and fields can store values of any data type.

- Sharding: Sharding is a powerful feature of MongoDB that distributes data across several servers instead of storing it on a single server.

- Data replication and recovery: MongoDB replicates data across multiple servers (replica sets), so the data remains available and recoverable if a server fails.

- High performance and speed: MongoDB supports features such as dynamic ad-hoc queries, indexing for faster searches, and tools like the aggregation pipeline for aggregation queries.

MongoDB Database

1.2 - MongoDB Setup

MongoDB is a NoSQL document database that scales well horizontally and stores data as documents made of key-value pairs. MongoDB can be set up in multiple modes:

- Standalone Mode

- Cluster replicated mode

- Cluster sharded mode

Standalone Setup

Like any database, MongoDB supports a standalone setup, in which a single instance is created and the MongoDB software runs on top of it. This setup can be ideal for small amounts of data and for development environments. It is not recommended for production-grade environments, because it cannot scale out and has no failover.

Replicated Setup

A replica set in MongoDB is a group of mongod processes that maintain the same data set. Replica sets provide redundancy and high availability, and are the basis for all production deployments. These mongod processes usually run on different nodes (machines), which together form a replica set cluster.
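A replica set can elect a primary only while a strict majority of its voting members can reach each other. The plain Python sketch below illustrates that arithmetic. The function names are hypothetical. The numbers match the `majorityVoteCount: 2` that the Replication Setup guide reports for its three-member cluster.

```python
def majority(voting_members: int) -> int:
    """Votes required to elect a primary: a strict majority of voting members."""
    if voting_members < 1:
        raise ValueError("a replica set needs at least one voting member")
    return voting_members // 2 + 1


def fault_tolerance(voting_members: int) -> int:
    """Number of voting members that can be lost while a primary can still be elected."""
    return voting_members - majority(voting_members)


for n in range(1, 6):
    print(f"{n} members: majority={majority(n)}, tolerates {fault_tolerance(n)} failure(s)")
```

A fourth member raises the majority to 3 without improving fault tolerance. This is why odd member counts, such as 3 or 5, are the usual choice.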

Sharded Setup

Sharding is a method for distributing data across multiple machines. MongoDB uses sharding to support deployments with very large data sets and high throughput operations.

- Shard: Each shard contains a subset of the sharded data. Each shard can be deployed as a replica set to provide redundancy and high availability. Together, the cluster’s shards hold the entire data set for the cluster.

- Mongos: The mongos acts as a query router, providing an interface between client applications and the sharded cluster.

- Config Servers: Config servers store metadata and configuration settings for the cluster. They are also deployed as a replica set.
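The Python sketch below illustrates the router's job. It sends a query to a shard by finding which key range (chunk) contains the shard key value. This is a simplified model of range-based sharding, not how mongos is implemented. The chunk bounds and shard names are hypothetical, and a real mongos reads the chunk-to-shard metadata from the config servers.

```python
import bisect

# Hypothetical chunk layout for a numeric shard key. Each chunk covers
# [its lower bound, the next chunk's lower bound) and lives on one shard.
CHUNK_LOWER_BOUNDS = [0, 1_000, 5_000]
CHUNK_SHARDS = ["shard-a", "shard-b", "shard-c"]


def route(shard_key_value: int) -> str:
    """Return the shard owning the chunk that contains shard_key_value."""
    if shard_key_value < CHUNK_LOWER_BOUNDS[0]:
        raise ValueError("value is below the first chunk's lower bound")
    # bisect_right finds the first bound greater than the value; the chunk
    # we want starts at the bound just before it.
    idx = bisect.bisect_right(CHUNK_LOWER_BOUNDS, shard_key_value) - 1
    return CHUNK_SHARDS[idx]


for value in (42, 1_000, 7_500):
    print(value, "->", route(value))
```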

2 - Getting Started

2.1 - Installation

The MongoDB operator is based on the CRD (Custom Resource Definition) framework of Kubernetes. For more information about the CRD framework, please refer to the official documentation. In a nutshell, a CRD is a feature through which we can develop our own custom APIs inside Kubernetes.

The MongoDB Operator provides the following custom resource kinds:

- MongoDB

- MongoDBCluster

The MongoDB Operator requires a Kubernetes cluster of version >=1.16.0. If you have just started with CRDs and operators, it is highly recommended to use the latest version of Kubernetes.

The MongoDB operator can be installed with either Helm or kubectl commands.
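You can check the version requirement with a script. The server's version string is available in the `serverVersion.gitVersion` field of `kubectl version -o json`. The Python sketch below compares that string against 1.16.0. It parses the version into integers because a plain string comparison gets cases like "v1.9" vs "v1.16" wrong. The helper names are hypothetical.

```python
import re

MIN_VERSION = (1, 16, 0)  # minimum Kubernetes version stated above


def parse_k8s_version(git_version: str) -> tuple:
    """Parse strings such as 'v1.21.5' or 'v1.21.5-eks-bc4871b' into (1, 21, 5)."""
    match = re.match(r"^v?(\d+)\.(\d+)\.(\d+)", git_version)
    if match is None:
        raise ValueError(f"unrecognised version string: {git_version!r}")
    return tuple(int(part) for part in match.groups())


def is_supported(git_version: str) -> bool:
    """True if the cluster version is at least MIN_VERSION (tuples compare element-wise)."""
    return parse_k8s_version(git_version) >= MIN_VERSION


print(is_supported("v1.21.5"))   # True
print(is_supported("v1.15.12"))  # False
```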

Note

The recommended way of installation is Helm.

Operator Setup by Helm

The mongodb-operator can be installed with the following Helm commands.

# Add the helm chart

$ helm repo add ot-helm https://ot-container-kit.github.io/helm-charts/

...

"ot-helm" has been added to your repositories

# Deploy the MongoDB Operator

$ helm upgrade mongodb-operator ot-helm/mongodb-operator \
  --install --create-namespace --namespace ot-operators

...

Release "mongodb-operator" does not exist. Installing it now.

NAME: mongodb-operator

LAST DEPLOYED: Sun Jan 9 23:05:13 2022

NAMESPACE: ot-operators

STATUS: deployed

REVISION: 1

Once the helm chart is deployed, we can test the status of operator pod by using:

# Testing Operator

$ helm test mongodb-operator --namespace ot-operators

...

NAME: mongodb-operator

LAST DEPLOYED: Sun Jan 9 23:05:13 2022

NAMESPACE: ot-operators

STATUS: deployed

REVISION: 1

TEST SUITE: mongodb-operator-test-connection

Last Started: Sun Jan 9 23:05:54 2022

Last Completed: Sun Jan 9 23:06:01 2022

Phase: Succeeded

Verify the deployment of the MongoDB Operator using kubectl:

# List the pod and status of mongodb-operator

$ kubectl get pods -n ot-operators -l name=mongodb-operator

...

NAME READY STATUS RESTARTS AGE

mongodb-operator-fc88b45b5-8rmtj 1/1 Running 0 21d

Operator Setup by Kubectl

If using the Helm chart is not possible, the MongoDB operator can also be installed with kubectl commands.

As a first step, we need to set up a namespace and then deploy the CRD definitions inside Kubernetes.

# Setup of CRDs

$ kubectl create namespace ot-operators

$ kubectl apply -f https://github.com/OT-CONTAINER-KIT/mongodb-operator/raw/main/config/crd/bases/opstreelabs.in_mongodbs.yaml

$ kubectl apply -f https://github.com/OT-CONTAINER-KIT/mongodb-operator/raw/main/config/crd/bases/opstreelabs.in_mongodbclusters.yaml

Once the namespace and CRDs are in place, we need to set up the RBAC resources: ServiceAccount, ClusterRole, and ClusterRoleBinding.

# Setup of RBAC account

$ kubectl apply -f https://raw.githubusercontent.com/OT-CONTAINER-KIT/mongodb-operator/main/config/rbac/service_account.yaml

$ kubectl apply -f https://raw.githubusercontent.com/OT-CONTAINER-KIT/mongodb-operator/main/config/rbac/role.yaml

$ kubectl apply -f https://github.com/OT-CONTAINER-KIT/mongodb-operator/raw/main/config/rbac/role_binding.yaml

As the last step of the setup, we can deploy the MongoDB Operator as a Kubernetes deployment.

# Deployment for MongoDB Operator

$ kubectl apply -f https://github.com/OT-CONTAINER-KIT/mongodb-operator/raw/main/config/manager/manager.yaml

Verify the deployment of the MongoDB Operator using kubectl:

# List the pod and status of mongodb-operator

$ kubectl get pods -n ot-operators -l name=mongodb-operator

...

NAME READY STATUS RESTARTS AGE

mongodb-operator-fc88b45b5-8rmtj 1/1 Running 0 21d
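In automation, checking this output by eye does not scale. `kubectl wait --for=condition=Ready pod -l name=mongodb-operator -n ot-operators --timeout=120s` is the more robust option. If you already have the table text, though, the Python sketch below parses it and reports whether every container of each pod is ready and the pod is Running. The function name is hypothetical. The sketch assumes the default `kubectl get pods` column layout.

```python
def pods_ready(kubectl_output: str) -> dict:
    """Map each pod name to True when all its containers are ready and it is Running.

    Expects the default table printed by `kubectl get pods` (NAME READY STATUS ...).
    """
    lines = [line for line in kubectl_output.strip().splitlines() if line.strip()]
    header = lines[0].split()
    name_col = header.index("NAME")
    ready_col = header.index("READY")
    status_col = header.index("STATUS")
    result = {}
    for line in lines[1:]:
        cols = line.split()
        ready, total = cols[ready_col].split("/")
        result[cols[name_col]] = ready == total and cols[status_col] == "Running"
    return result


sample = """
NAME                               READY   STATUS    RESTARTS   AGE
mongodb-operator-fc88b45b5-8rmtj   1/1     Running   0          21d
"""
print(pods_ready(sample))  # {'mongodb-operator-fc88b45b5-8rmtj': True}
```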

2.2 - Standalone Setup

The MongoDB operator is capable of setting up MongoDB in standalone mode with additional features such as monitoring.

In this guide, we will see how to set up MongoDB standalone with the help of the MongoDB operator and its custom CRDs. We are going to use the Kubernetes deployment tools helm and kubectl.

Standalone architecture:

Setup using Helm Chart

Add the Helm repository so that the MongoDB chart is available for installation. The repository can be added with:

# Adding helm repository

$ helm repo add ot-helm https://ot-container-kit.github.io/helm-charts/

...

"ot-helm" has been added to your repositories

Once the repository is added, make sure it is updated with the latest information.

# Updating ot-helm repository

$ helm repo update

Once this is done, we can install the MongoDB database with:

# Install the helm chart of MongoDB

$ helm install mongodb-ex --namespace ot-operators ot-helm/mongodb

...

NAME: mongodb-ex

LAST DEPLOYED: Mon Jan 31 20:29:54 2022

NAMESPACE: ot-operators

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: mongodb

CHART VERSION: 0.1.0

APP VERSION: 0.1.0

The helm chart for MongoDB standalone setup has been deployed.

Get the list of pods by executing:

kubectl get pods --namespace ot-operators -l app=mongodb-ex-standalone

For getting the credential for admin user:

kubectl get secrets -n ot-operators mongodb-ex-secret -o jsonpath="{.data.password}" | base64 -d

Verify the pod status and the secret value:

# Verify the status of the pods

$ kubectl get pods --namespace ot-operators -l app=mongodb-ex-standalone

...

NAME READY STATUS RESTARTS AGE

mongodb-ex-standalone-0 2/2 Running 0 2m10s

# Verify the secret value

$ export PASSWORD=$(kubectl get secrets -n ot-operators mongodb-ex-secret -o jsonpath="{.data.password}" | base64 -d)

$ echo ${PASSWORD}

...

G7orFUuIrajGDK1iQzoD
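The `base64 -d` step is needed because Kubernetes stores Secret `data` values base64-encoded. Base64 is an encoding, not encryption, so access to the Secret still needs to be restricted. The Python sketch below is an equivalent of the pipeline that can be useful in test scripts. It parses `-o json` output instead of using a jsonpath expression. The sample Secret is a placeholder.

```python
import base64
import json


def secret_value(secret_json: str, key: str = "password") -> str:
    """Decode one key of a Secret printed by `kubectl get secret <name> -o json`."""
    secret = json.loads(secret_json)
    # Secret .data values are base64-encoded strings.
    return base64.b64decode(secret["data"][key]).decode("utf-8")


# Hypothetical, trimmed Secret object with a placeholder password.
example = json.dumps({
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "mongodb-ex-secret", "namespace": "ot-operators"},
    "data": {"password": base64.b64encode(b"example-password").decode("ascii")},
})
print(secret_value(example))  # example-password
```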

Setup using Kubectl Commands

This is not the recommended way to set up a MongoDB database, but it can be used for a POC or for learning how the MongoDB operator deploys MongoDB.

All the kubectl manifests are located in the examples folder and can be applied with kubectl apply -f.

For example:

$ kubectl apply -f examples/basic/standalone.yaml -n ot-operators

Validation of MongoDB Database

To validate the state of the MongoDB database, open a shell in the MongoDB pod.

# For getting the MongoDB container

$ kubectl exec -it mongodb-ex-standalone-0 -c mongo -n ot-operators -- bash

Run the MongoDB ping command to check the health of MongoDB.

# MongoDB ping command

$ mongosh --eval "db.adminCommand('ping')"

...

Current Mongosh Log ID: 61f81de07cacf368e9a25322

Connecting to: mongodb://127.0.0.1:27017/?directConnection=true&serverSelectionTimeoutMS=2000

Using MongoDB: 5.0.5

Using Mongosh: 1.1.7

{ ok: 1 }

We can also check the state of MongoDB by listing the database stats, but this requires the admin username and password.

# MongoDB command for checking db stats

$ mongosh -u $MONGO_ROOT_USERNAME -p $MONGO_ROOT_PASSWORD --eval "db.stats()"

...

Current Mongosh Log ID: 61f820ab99bd3271e034d3b6

Connecting to: mongodb://127.0.0.1:27017/?directConnection=true&serverSelectionTimeoutMS=2000

Using MongoDB: 5.0.5

Using Mongosh: 1.1.7

{

db: 'test',

collections: 0,

views: 0,

objects: 0,

avgObjSize: 0,

dataSize: 0,

storageSize: 0,

totalSize: 0,

indexes: 0,

indexSize: 0,

scaleFactor: 1,

fileSize: 0,

fsUsedSize: 0,

fsTotalSize: 0,

ok: 1

}

Open an authenticated MongoDB shell (mongosh -u $MONGO_ROOT_USERNAME -p $MONGO_ROOT_PASSWORD), then create a database and insert some data into it.

# create database inside MongoDB

$ use validatedb

$ db.user.insert({name: "Abhishek Dubey", age: 24})

$ db.user.insert({name: "Sajal Jain", age: 32})

...

WriteResult({ "nInserted" : 1 })

Let’s read the data back from validatedb to check whether the write succeeded.

$ db.user.find().pretty();

...

{

"_id" : ObjectId("61fbe07d052a532fa6842a00"),

"name" : "Abhishek Dubey",

"age" : 24

}

{

"_id" : ObjectId("61fbe1b7052a532fa6842a01"),

"name" : "Sajal Jain",

"age" : 32

}

2.3 - Replication Setup

If we are running our application in a production environment, we should always go with an HA architecture. The MongoDB operator can also set up the MongoDB database in replication mode, with one primary instance and multiple secondary instances.

Architecture:

In this guide, we will see how to set up a MongoDB replicated cluster using the MongoDB operator and its custom CRDs.

Setup using Helm Chart

Add the Helm repository so that the MongoDB chart is available for installation. The repository can be added with:

# Adding helm repository

$ helm repo add ot-helm https://ot-container-kit.github.io/helm-charts/

...

"ot-helm" has been added to your repositories

Once the repository is added, make sure it is updated with the latest information.

# Updating ot-helm repository

$ helm repo update

Once this is done, we can install the MongoDB cluster database with:

# Installation of MongoDB replication cluster

$ helm install mongodb-ex-cluster --namespace ot-operators ot-helm/mongodb-cluster

...

NAME: mongodb-ex-cluster

LAST DEPLOYED: Tue Feb 1 23:18:36 2022

NAMESPACE: ot-operators

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: mongodb-cluster

CHART VERSION: 0.1.0

APP VERSION: 0.1.0

The helm chart for MongoDB standalone setup has been deployed.

Get the list of pods by executing:

kubectl get pods --namespace ot-operators -l app=mongodb-ex-cluster-cluster

For getting the credential for admin user:

kubectl get secrets -n ot-operators mongodb-ex-secret -o jsonpath="{.data.password}" | base64 -d

Verify the pod status of the MongoDB database cluster and the secret value:

# Verify the status of the mongodb cluster pods

$ kubectl get pods --namespace ot-operators -l app=mongodb-ex-cluster-cluster

...

NAME READY STATUS RESTARTS AGE

mongodb-ex-cluster-cluster-0 2/2 Running 0 5m57s

mongodb-ex-cluster-cluster-1 2/2 Running 0 5m28s

mongodb-ex-cluster-cluster-2 2/2 Running 0 4m48s

# Verify the secret value

$ export PASSWORD=$(kubectl get secrets -n ot-operators mongodb-ex-cluster-secret -o jsonpath="{.data.password}" | base64 -d)

$ echo ${PASSWORD}

...

fEr9FScI9ojh6LSh2meK

Setup using Kubectl Commands

This is not the recommended way to set up a MongoDB database, but it can be used for a POC or for learning how the MongoDB operator deploys MongoDB.

All the kubectl manifests are located in the examples folder and can be applied with kubectl apply -f.

For example:

$ kubectl apply -f examples/basic/clusterd.yaml -n ot-operators

Validation of MongoDB Cluster

Once the cluster is created and in running state, we should verify the health of the MongoDB cluster.

# Verifying the health of the cluster

$ kubectl exec -it mongodb-ex-cluster-cluster-0 -n ot-operators -- bash

$ mongo -u $MONGO_ROOT_USERNAME -p $MONGO_ROOT_PASSWORD --eval "db.adminCommand( { replSetGetStatus: 1 } )"

...

{

"set" : "mongodb-ex-cluster",

"date" : ISODate("2022-02-03T08:52:09.257Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"majorityVoteCount" : 2,

"writeMajorityCount" : 2,

"votingMembersCount" : 3,

"writableVotingMembersCount" : 3,

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1643878322, 1),

"t" : NumberLong(1)

},

"lastCommittedWallTime" : ISODate("2022-02-03T08:52:02.111Z"),

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1643878322, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1643878322, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1643878322, 1),

"t" : NumberLong(1)

},

"lastAppliedWallTime" : ISODate("2022-02-03T08:52:02.111Z"),

"lastDurableWallTime" : ISODate("2022-02-03T08:52:02.111Z")

},

"lastStableRecoveryTimestamp" : Timestamp(1643878312, 1),

"electionCandidateMetrics" : {

"lastElectionReason" : "electionTimeout",

"lastElectionDate" : ISODate("2022-02-01T17:50:49.975Z"),

"electionTerm" : NumberLong(1),

"lastCommittedOpTimeAtElection" : {

"ts" : Timestamp(1643737839, 1),

"t" : NumberLong(-1)

},

"lastSeenOpTimeAtElection" : {

"ts" : Timestamp(1643737839, 1),

"t" : NumberLong(-1)

},

"numVotesNeeded" : 2,

"priorityAtElection" : 1,

"electionTimeoutMillis" : NumberLong(10000),

"numCatchUpOps" : NumberLong(0),

"newTermStartDate" : ISODate("2022-02-01T17:50:50.072Z"),

"wMajorityWriteAvailabilityDate" : ISODate("2022-02-01T17:50:50.926Z")

},

"members" : [

{

"_id" : 0,

"name" : "mongodb-ex-cluster-cluster-0.mongodb-ex-cluster-cluster.ot-operators:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 140603,

"optime" : {

"ts" : Timestamp(1643878322, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2022-02-03T08:52:02Z"),

"lastAppliedWallTime" : ISODate("2022-02-03T08:52:02.111Z"),

"lastDurableWallTime" : ISODate("2022-02-03T08:52:02.111Z"),

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1643737849, 1),

"electionDate" : ISODate("2022-02-01T17:50:49Z"),

"configVersion" : 1,

"configTerm" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "mongodb-ex-cluster-cluster-1.mongodb-ex-cluster-cluster.ot-operators:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 140490,

"optime" : {

"ts" : Timestamp(1643878322, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1643878322, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2022-02-03T08:52:02Z"),

"optimeDurableDate" : ISODate("2022-02-03T08:52:02Z"),

"lastAppliedWallTime" : ISODate("2022-02-03T08:52:02.111Z"),

"lastDurableWallTime" : ISODate("2022-02-03T08:52:02.111Z"),

"lastHeartbeat" : ISODate("2022-02-03T08:52:08.021Z"),

"lastHeartbeatRecv" : ISODate("2022-02-03T08:52:07.926Z"),

"pingMs" : NumberLong(65),

"lastHeartbeatMessage" : "",

"syncSourceHost" : "mongodb-ex-cluster-cluster-2.mongodb-ex-cluster-cluster.ot-operators:27017",

"syncSourceId" : 2,

"infoMessage" : "",

"configVersion" : 1,

"configTerm" : 1

},

{

"_id" : 2,

"name" : "mongodb-ex-cluster-cluster-2.mongodb-ex-cluster-cluster.ot-operators:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 140490,

"optime" : {

"ts" : Timestamp(1643878322, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1643878322, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2022-02-03T08:52:02Z"),

"optimeDurableDate" : ISODate("2022-02-03T08:52:02Z"),

"lastAppliedWallTime" : ISODate("2022-02-03T08:52:02.111Z"),

"lastDurableWallTime" : ISODate("2022-02-03T08:52:02.111Z"),

"lastHeartbeat" : ISODate("2022-02-03T08:52:09.022Z"),

"lastHeartbeatRecv" : ISODate("2022-02-03T08:52:07.475Z"),

"pingMs" : NumberLong(1),

"lastHeartbeatMessage" : "",

"syncSourceHost" : "mongodb-ex-cluster-cluster-0.mongodb-ex-cluster-cluster.ot-operators:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1,

"configTerm" : 1

}

],

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1643878322, 1),

"signature" : {

"hash" : BinData(0,"M4Mj+BFC8//nI2QIT+Hic/N6N4g="),

"keyId" : NumberLong("7059800308947353604")

}

},

"operationTime" : Timestamp(1643878322, 1)

}
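When this check runs in automation, reading the whole document by eye is impractical. The Python sketch below reduces a replSetGetStatus result to the fields that matter for a health check. It assumes the result is already available as a Python dict, for example from a MongoDB driver. The shell prints extended JSON types such as ISODate and NumberLong, which the standard `json` module cannot parse directly. The function name is hypothetical.

```python
def summarize_replset(status: dict) -> dict:
    """Summarize a replSetGetStatus result: set name, primary, secondaries, unhealthy members."""
    members = status["members"]
    primaries = [m["name"] for m in members if m["stateStr"] == "PRIMARY"]
    return {
        "set": status["set"],
        "primary": primaries[0] if primaries else None,
        "secondaries": [m["name"] for m in members if m["stateStr"] == "SECONDARY"],
        # health is 1 when the member is up and 0 when it is down.
        "unhealthy": [m["name"] for m in members if m["health"] != 1],
    }


# Trimmed by hand from the output above (only the fields the function reads).
suffix = ".mongodb-ex-cluster-cluster.ot-operators:27017"
status = {
    "set": "mongodb-ex-cluster",
    "members": [
        {"name": "mongodb-ex-cluster-cluster-0" + suffix, "health": 1, "stateStr": "PRIMARY"},
        {"name": "mongodb-ex-cluster-cluster-1" + suffix, "health": 1, "stateStr": "SECONDARY"},
        {"name": "mongodb-ex-cluster-cluster-2" + suffix, "health": 1, "stateStr": "SECONDARY"},
    ],
}
print(summarize_replset(status))
```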

Log in to the MongoDB shell on the primary node (mongodb-ex-cluster-cluster-0), create a database, and insert some data into it.

# create database inside MongoDB

$ use validatedb

$ db.user.insert({name: "Abhishek Dubey", age: 24})

$ db.user.insert({name: "Sajal Jain", age: 32})

...

WriteResult({ "nInserted" : 1 })

Note

Make sure you perform the following read on a secondary node.

Let’s read the data back from validatedb to check whether the write succeeded.

# Get inside the secondary pod

$ kubectl exec -it mongodb-ex-cluster-cluster-1 -n ot-operators -- bash

# Login inside the MongoDB shell

$ mongo -u $MONGO_ROOT_USERNAME -p $MONGO_ROOT_PASSWORD

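List the collection data on the MongoDB secondary node with the following mongo shell commands. rs.secondaryOk() allows read operations on the secondary for the current connection, and use validatedb switches to the database written earlier.

$ rs.secondaryOk()

$ use validatedb

$ db.user.find().pretty();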

...

{

"_id" : ObjectId("61fbe23d5b403e9fb63bc374"),

"name" : "Abhishek Dubey",

"age" : 24

}

{

"_id" : ObjectId("61fbe2465b403e9fb63bc375"),

"name" : "Sajal Jain",

"age" : 32

}

As we can see, the data written on the primary node is also queryable from the secondary nodes.

2.4 - Failover Testing

In this section, we will deactivate/delete specific nodes (mainly the primary node) to force the replica set to hold an election and select a new primary.

Before deleting/deactivating the primary node, we will put some dummy data into it.

# Get inside the primary node pod

$ kubectl exec -it mongodb-ex-cluster-cluster-0 -n ot-operators -- bash

# Login inside the MongoDB shell

$ mongo -u $MONGO_ROOT_USERNAME -p $MONGO_ROOT_PASSWORD

Once we are inside the MongoDB primary pod, we will create a db called failtestdb and create a collection inside it with some dummy data.

# Creation of the MongoDB database

$ use failtestdb

# Collection creation inside MongoDB database

$ db.user.insert({name: "Abhishek Dubey", age: 24})

...

WriteResult({ "nInserted" : 1 })

Let’s check whether the data was written properly on the primary node.

# Find the data available inside the collection

$ db.user.find().pretty();

...

{

"_id" : ObjectId("61fc0e19a63b3bfa4f30d5e2"),

"name" : "Abhishek Dubey",

"age" : 24

}

Deactivating/Deleting MongoDB Primary and Secondary

Once we have established that the database and its content are written properly, let’s delete the primary pod to see what impact it has.

# Delete the primary pod

$ kubectl delete pod mongodb-ex-cluster-cluster-0 -n ot-operators

...

pod "mongodb-ex-cluster-cluster-0" deleted

When the pod is up and running again, get inside the same pod and check the role assigned to that MongoDB node, as well as the replication status of the cluster.

# Login again inside the primary pod

$ kubectl exec -it mongodb-ex-cluster-cluster-0 -n ot-operators -- bash

# Login inside the MongoDB shell

$ mongo -u $MONGO_ROOT_USERNAME -p $MONGO_ROOT_PASSWORD

# Check the mongo node is still primary or not

$ db.hello()

...

{

"topologyVersion" : {

"processId" : ObjectId("61fc125bbb845ecebf0b6c1e"),

"counter" : NumberLong(4)

},

"hosts" : [

"mongodb-ex-cluster-cluster-0.mongodb-ex-cluster-cluster.ot-operators:27017",

"mongodb-ex-cluster-cluster-1.mongodb-ex-cluster-cluster.ot-operators:27017",

"mongodb-ex-cluster-cluster-2.mongodb-ex-cluster-cluster.ot-operators:27017"

],

"setName" : "mongodb-ex-cluster",

"setVersion" : 1,

"isWritablePrimary" : false,

"secondary" : true,

"primary" : "mongodb-ex-cluster-cluster-1.mongodb-ex-cluster-cluster.ot-operators:27017",

}

}

As we can see in the output of the above command, this pod has become a secondary and mongodb-ex-cluster-cluster-1 has become the primary of the MongoDB replica set. This pod is also now in a non-writable state. Let’s check whether we can fetch the collection data from the MongoDB database.

# Change the database to failtestdb

$ use failtestdb

# Allow reads on this secondary node

$ rs.secondaryOk()

# Find the data available inside the collection

$ db.user.find().pretty();

...

{

"_id" : ObjectId("61fc0e19a63b3bfa4f30d5e2"),

"name" : "Abhishek Dubey",

"age" : 24

}
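The role check above can be scripted. The Python sketch below classifies a member from the `isWritablePrimary`, `secondary`, and `arbiterOnly` fields of a `hello` result. It also detects whether the reported primary changed. It assumes the result has already been fetched as a dict, for example through a driver. The function names are hypothetical.

```python
def node_role(hello: dict) -> str:
    """Classify a member from the fields of a hello command result."""
    if hello.get("isWritablePrimary"):
        return "primary"
    if hello.get("secondary"):
        return "secondary"
    if hello.get("arbiterOnly"):
        return "arbiter"
    return "other"  # e.g. a member that is still starting up or recovering


def failover_happened(old_primary: str, hello: dict) -> bool:
    """True if the hello result reports a primary different from old_primary."""
    return hello.get("primary") not in (None, old_primary)


# Fields taken from the db.hello() output after the failover above.
after_failover = {
    "setName": "mongodb-ex-cluster",
    "isWritablePrimary": False,
    "secondary": True,
    "primary": "mongodb-ex-cluster-cluster-1.mongodb-ex-cluster-cluster.ot-operators:27017",
}
print(node_role(after_failover))  # secondary
```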

3 - Configuration

3.1 - MongoDB Standalone

The MongoDB standalone configuration is easily customizable using Helm as well as kubectl. Since all the configuration is in the form of YAML files, it can easily be changed and customized.

The values.yaml file for the MongoDB standalone setup can be found here. If the setup is not done using Helm, the Kubernetes manifests need to be customized instead.

Parameters for Helm Chart

| Name | Value | Description |
|---|---|---|
| image.name | quay.io/opstree/mongo | Name of the MongoDB image |
| image.tag | v5.0 | Tag for the MongoDB image |
| image.imagePullPolicy | IfNotPresent | Image pull policy of the MongoDB image |
| image.pullSecret | "" | Image pull secret for a private registry |
| resources | {} | Requests and limits for the MongoDB statefulset |
| storage.enabled | true | Whether storage is enabled for MongoDB |
| storage.accessModes | ["ReadWriteOnce"] | Access mode for the storage provider |
| storage.storageSize | 1Gi | Size of the storage for MongoDB |
| storage.storageClass | gp2 | Name of the storageClass used to create storage |
| mongoDBMonitoring.enabled | true | Whether the MongoDB exporter should be deployed |
| mongoDBMonitoring.image.name | bitnami/mongodb-exporter | Name of the MongoDB exporter image |
| mongoDBMonitoring.image.tag | 0.11.2-debian-10-r382 | Tag of the MongoDB exporter image |
| mongoDBMonitoring.image.imagePullPolicy | IfNotPresent | Image pull policy of the MongoDB exporter image |
| serviceMonitor.enabled | false | Whether to create a ServiceMonitor to monitor MongoDB with Prometheus |
| serviceMonitor.interval | 30s | Interval at which metrics should be scraped |
| serviceMonitor.scrapeTimeout | 10s | Timeout after which the scrape is ended |
| serviceMonitor.namespace | monitoring | Namespace in which the Prometheus operator is running |
| nodeSelector | {} | Node selector for the MongoDB statefulset |
| priorityClassName | "" | Priority class name for the MongoDB statefulset |
| affinity | {} | Node and pod affinity for the MongoDB statefulset |
| tolerations | [] | Tolerations for the MongoDB statefulset |
| securityContext | {} | Security context for the MongoDB pod, such as fsGroup |
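Prometheus rejects a scrape configuration whose timeout is longer than its interval. Before overriding `serviceMonitor.interval` or `serviceMonitor.scrapeTimeout`, you could check the pair with the Python sketch below. It parses Prometheus-style durations into integer milliseconds. It does not enforce Prometheus's rule that units appear in descending order. The helper names are hypothetical.

```python
import re

# Milliseconds per unit for Prometheus-style durations.
_UNIT_MS = {"ms": 1, "s": 1_000, "m": 60_000, "h": 3_600_000,
            "d": 86_400_000, "w": 604_800_000, "y": 31_536_000_000}
_PART = re.compile(r"(\d+)(ms|s|m|h|d|w|y)")


def parse_duration_ms(text: str) -> int:
    """Parse durations such as '30s', '1m30s' or '500ms' into milliseconds."""
    parts = _PART.findall(text)
    # Reject strings with leftover characters, e.g. '30 s' or '10sec'.
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"invalid duration: {text!r}")
    return sum(int(n) * _UNIT_MS[u] for n, u in parts)


def scrape_settings_valid(interval: str, scrape_timeout: str) -> bool:
    """True if the scrape timeout does not exceed the scrape interval."""
    return parse_duration_ms(scrape_timeout) <= parse_duration_ms(interval)


print(scrape_settings_valid("30s", "10s"))  # True (the chart defaults)
print(scrape_settings_valid("30s", "1m"))   # False
```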

Parameters for CRD Object Definition

These are the parameters currently supported by the MongoDB operator for the standalone MongoDB database setup:

- kubernetesConfig

- storage

- mongoDBSecurity

- mongoDBMonitoring

kubernetesConfig

kubernetesConfig is the general configuration parameter for the MongoDB CRD, in which we define Kubernetes-related configuration details such as image, tag, imagePullPolicy, and resources.

kubernetesConfig:
  image: quay.io/opstree/mongo:v5.0
  imagePullPolicy: IfNotPresent
  resources:
    requests:
      cpu: 1
      memory: 8Gi
    limits:
      cpu: 1
      memory: 8Gi
  imagePullSecret: regcred
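Kubernetes rejects a container whose request is larger than its limit. Before applying a custom `resources` block, you could sanity-check it with the Python sketch below. Quantity parsing covers only the common suffixes (Ki to Ti, k to T, and m for millicores), not the larger P/E and Pi/Ei suffixes. The function names are hypothetical.

```python
from fractions import Fraction

# Suffix multipliers for Kubernetes quantities (a common subset only).
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "m": Fraction(1, 1000),
}


def parse_quantity(value) -> Fraction:
    """Convert quantities like 1, '500m' or '8Gi' to an exact number."""
    text = str(value).strip()
    for suffix, factor in _SUFFIXES.items():
        if text.endswith(suffix):
            return Fraction(text[: -len(suffix)]) * factor
    return Fraction(text)  # plain number, e.g. cpu: 1


def request_limit_violations(resources: dict) -> list:
    """List resources whose request exceeds its limit."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    problems = []
    for name, request in requests.items():
        limit = limits.get(name)
        if limit is not None and parse_quantity(request) > parse_quantity(limit):
            problems.append(f"{name}: request {request} exceeds limit {limit}")
    return problems


# Same values as the kubernetesConfig example above.
example = {"requests": {"cpu": 1, "memory": "8Gi"}, "limits": {"cpu": 1, "memory": "8Gi"}}
print(request_limit_violations(example))  # []
```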

NodeSelector: nodeSelector is the simplest recommended form of node selection constraint. nodeSelector is a field of PodSpec that specifies a map of key-value pairs.

kubernetesConfig:
  nodeSelector:
    beta.kubernetes.io/os: linux

Affinity: The affinity/anti-affinity feature greatly expands the types of constraints you can express. Its language is more expressive than nodeSelector and offers more matching rules beyond exact matches combined with a logical AND.

kubernetesConfig:
  mongoAffinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: beta.kubernetes.io/os
            operator: In
            values:
            - linux
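The difference between the two mechanisms is easiest to see in code. The Python sketch below evaluates a nodeSelector and a required nodeAffinity against a node's labels, using the documented Kubernetes semantics: selector terms are ORed, and the expressions inside a term are ANDed. It is a simplified model, not the scheduler. It ignores matchFields and the Gt/Lt operators, and the function names are hypothetical.

```python
def matches_node_selector(node_labels: dict, node_selector: dict) -> bool:
    """nodeSelector: every key-value pair must be present on the node."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def _expression_matches(node_labels: dict, expr: dict) -> bool:
    key, op, values = expr["key"], expr["operator"], expr.get("values", [])
    if op == "In":
        return key in node_labels and node_labels[key] in values
    if op == "NotIn":
        return key not in node_labels or node_labels[key] not in values
    if op == "Exists":
        return key in node_labels
    if op == "DoesNotExist":
        return key not in node_labels
    raise ValueError(f"operator not covered by this sketch: {op}")  # e.g. Gt, Lt


def matches_required_affinity(node_labels: dict, node_selector_terms: list) -> bool:
    """Terms are ORed; the matchExpressions inside one term are ANDed."""
    for term in node_selector_terms:
        exprs = term.get("matchExpressions", [])
        # An empty term matches no nodes.
        if exprs and all(_expression_matches(node_labels, e) for e in exprs):
            return True
    return False


linux_node = {"beta.kubernetes.io/os": "linux"}
terms = [{"matchExpressions": [
    {"key": "beta.kubernetes.io/os", "operator": "In", "values": ["linux"]}]}]
print(matches_node_selector(linux_node, {"beta.kubernetes.io/os": "linux"}))   # True
print(matches_required_affinity(linux_node, terms))                           # True
print(matches_required_affinity({"beta.kubernetes.io/os": "windows"}, terms))  # False
```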

PriorityClassName: A PriorityClass is a non-namespaced object that defines a mapping from a priority class name to the integer value of the priority. The name is specified in the name field of the PriorityClass object’s metadata, and the value is specified in the required value field.

kubernetesConfig:
  priorityClassName: system-node-critical

Tolerations: Tolerations are applied to pods, and allow (but do not require) the pods to schedule onto nodes with matching taints.

kubernetesConfig:
  tolerations:
  - key: "example-key"
    operator: "Exists"
    effect: "NoSchedule"
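The Python sketch below shows how a toleration is matched against a taint, following the documented Kubernetes rules. An empty toleration effect matches every effect, and `Equal` is the default operator. `Exists` ignores the value, and an empty key with `Exists` matches every taint. This is a simplified model: it ignores `tolerationSeconds`, and the function names are hypothetical.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if a single toleration matches a single taint."""
    # An empty effect in the toleration matches every taint effect.
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False
    operator = toleration.get("operator", "Equal")  # Equal is the default operator
    key = toleration.get("key", "")
    if operator == "Exists":
        # Exists ignores the value; an empty key with Exists matches every taint key.
        return key == "" or key == taint["key"]
    if operator == "Equal":
        return key == taint["key"] and toleration.get("value", "") == taint.get("value", "")
    raise ValueError(f"unknown operator: {operator}")


def can_schedule(tolerations: list, taints: list) -> bool:
    """A pod can be scheduled only if every NoSchedule/NoExecute taint is tolerated."""
    hard_taints = [t for t in taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard_taints)


# The toleration from the example above.
mongo_tolerations = [{"key": "example-key", "operator": "Exists", "effect": "NoSchedule"}]
print(can_schedule(mongo_tolerations, [{"key": "example-key", "value": "x", "effect": "NoSchedule"}]))  # True
print(can_schedule(mongo_tolerations, [{"key": "other-key", "effect": "NoSchedule"}]))  # False
```

`can_schedule(mongo_tolerations, [])` is also True. A toleration permits scheduling on a tainted node but does not attract the pod to it, which is what "allow (but do not require)" means.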

SecurityContext: A security context defines privilege and access control settings for a Pod or Container. The security settings that you specify for a Pod apply to all Containers in the Pod.

securityContext:
  fsGroup: 1001

storage

storage is the storage-specific configuration for the MongoDB CRD. With this parameter we can enable persistence for the MongoDB statefulset by providing inputs such as accessModes, the size of the storage, and the storageClass.

storage:
  accessModes: ["ReadWriteOnce"]
  storageSize: 1Gi
  storageClass: csi-cephfs-sc

mongoDBSecurity

mongoDBSecurity is the security specification for the MongoDB CRD. To enable authentication for the MongoDB database, enable this configuration and provide parameters such as the admin username and a reference to the Kubernetes secret that holds the password.

mongoDBSecurity:
  mongoDBAdminUser: admin
  secretRef:
    name: mongodb-secret
    key: password
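The Secret referenced by `secretRef` has to exist before the operator can use it. The Python sketch below generates a random password with the `secrets` module and prints a Secret manifest whose name and key match the mongoDBSecurity example above. The `ot-operators` namespace is an assumption, so use the namespace of your MongoDB resource. The function names are hypothetical.

```python
import base64
import json
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Random alphanumeric password from the cryptographically secure secrets module."""
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


def secret_manifest(name: str, namespace: str, key: str, value: str) -> dict:
    """Build an Opaque Secret whose data[key] holds the base64-encoded value."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {key: base64.b64encode(value.encode("utf-8")).decode("ascii")},
    }


if __name__ == "__main__":
    manifest = secret_manifest("mongodb-secret", "ot-operators", "password", generate_password())
    print(json.dumps(manifest, indent=2))
```

The output can be piped to `kubectl apply -f -`, since kubectl accepts JSON manifests. Without a script, `kubectl create secret generic mongodb-secret --from-literal=password=<value> -n ot-operators` achieves the same result.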

mongoDBMonitoring

mongoDBMonitoring is the monitoring feature of the MongoDB CRD. With this parameter we can enable MongoDB monitoring through the MongoDB Exporter. In this parameter, we provide enableExporter along with the image, imagePullPolicy, and resources for the MongoDB Exporter.

mongoDBMonitoring:
  enableExporter: true
  image: bitnami/mongodb-exporter:0.11.2-debian-10-r382
  imagePullPolicy: IfNotPresent
  resources: {}

3.2 - MongoDB Replicated Cluster

The MongoDB cluster configuration is easily customizable using Helm as well as kubectl. Since all the configuration is in the form of YAML files, it can easily be changed and customized.

The values.yaml file for the MongoDB cluster setup can be found here. If the setup is not done using Helm, the Kubernetes manifests need to be customized instead.

Parameters for Helm Chart

| Name | Value | Description |
|---|---|---|
| clusterSize | 3 | Size of the MongoDB cluster |
| image.name | quay.io/opstree/mongo | Name of the MongoDB image |
| image.tag | v5.0 | Tag for the MongoDB image |
| image.imagePullPolicy | IfNotPresent | Image pull policy of the MongoDB image |
| image.pullSecret | "" | Image pull secret for a private registry |
| resources | {} | Requests and limits for the MongoDB statefulset |
| storage.enabled | true | Whether storage is enabled for MongoDB |
| storage.accessModes | ["ReadWriteOnce"] | Access mode for the storage provider |
| storage.storageSize | 1Gi | Size of the storage for MongoDB |
| storage.storageClass | gp2 | Name of the storageClass used to create storage |
| mongoDBMonitoring.enabled | true | Whether the MongoDB exporter should be deployed |
| mongoDBMonitoring.image.name | bitnami/mongodb-exporter | Name of the MongoDB exporter image |
| mongoDBMonitoring.image.tag | 0.11.2-debian-10-r382 | Tag of the MongoDB exporter image |
| mongoDBMonitoring.image.imagePullPolicy | IfNotPresent | Image pull policy of the MongoDB exporter image |
| serviceMonitor.enabled | false | Whether to create a ServiceMonitor to monitor MongoDB with Prometheus |
| serviceMonitor.interval | 30s | Interval at which metrics should be scraped |
| serviceMonitor.scrapeTimeout | 10s | Timeout after which the scrape is ended |
| serviceMonitor.namespace | monitoring | Namespace in which the Prometheus operator is running |
| nodeSelector | {} | Node selector for the MongoDB statefulset |
| priorityClassName | "" | Priority class name for the MongoDB statefulset |
| affinity | {} | Node and pod affinity for the MongoDB statefulset |
| tolerations | [] | Tolerations for the MongoDB statefulset |
| securityContext | {} | Security context for the MongoDB pod, such as fsGroup |

Parameters for CRD Object Definition

These are the parameters currently supported by the MongoDB operator for the clustered MongoDB database setup:

- clusterSize

- kubernetesConfig

- storage

- mongoDBSecurity

- mongoDBMonitoring

clusterSize

clusterSize is the size of the MongoDB replicated cluster, i.e. the number of nodes (pods) that should be part of the cluster. For example, one primary and two secondaries give a clusterSize of 3.

clusterSize: 3
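Each replica set member gets a stable DNS name from the StatefulSet. The Python sketch below derives the member addresses for a given clusterSize and builds a replica set connection string. The naming pattern is inferred from the replSetGetStatus output in the Replication Setup guide rather than from the operator's source, so verify it against your cluster. In that pattern, the StatefulSet and headless Service are both called `<name>-cluster`, and the replica set is named `<name>`. Because the URI has no database path, drivers authenticate against the admin database by default. The function names are hypothetical.

```python
from urllib.parse import quote_plus


def replica_hosts(name: str, namespace: str, cluster_size: int, port: int = 27017) -> list:
    """Per-pod DNS names, assuming the StatefulSet and headless Service are '<name>-cluster'."""
    sts = f"{name}-cluster"
    return [f"{sts}-{i}.{sts}.{namespace}:{port}" for i in range(cluster_size)]


def connection_uri(user: str, password: str, hosts: list, replica_set: str) -> str:
    """Replica set URI; credentials are percent-escaped as the MongoDB URI format requires."""
    return (f"mongodb://{quote_plus(user)}:{quote_plus(password)}@"
            f"{','.join(hosts)}/?replicaSet={replica_set}")


hosts = replica_hosts("mongodb-ex-cluster", "ot-operators", 3)
print(hosts[0])  # mongodb-ex-cluster-cluster-0.mongodb-ex-cluster-cluster.ot-operators:27017
print(connection_uri("admin", "p@ss", hosts, "mongodb-ex-cluster"))
```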

kubernetesConfig

kubernetesConfig is the general configuration parameter for the MongoDB CRD, in which we define Kubernetes-related configuration details such as image, tag, imagePullPolicy, and resources.

```yaml
kubernetesConfig:
  image: quay.io/opstree/mongo:v5.0
  imagePullPolicy: IfNotPresent
  resources:
    requests:
      cpu: 1
      memory: 8Gi
    limits:
      cpu: 1
      memory: 8Gi
  imagePullSecret: regcred
```
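The example sets identical requests and limits for both cpu and memory. With those values, Kubernetes places the MongoDB pod in the Guaranteed QoS class, which is the class least likely to be evicted under node resource pressure. The sketch below is a simplified classifier for a single-container pod. It is not the kubelet's code, and it compares quantities as plain strings, so it assumes requests and limits use the same notation.

```go
package main

import "fmt"

// resources mirrors the requests/limits maps of kubernetesConfig.resources.
// Quantities are compared as strings: "8Gi" vs "8Gi" matches, but
// "8Gi" vs "8192Mi" would wrongly be treated as different.
type resources struct {
	Requests map[string]string
	Limits   map[string]string
}

// qosClass approximates the Kubernetes QoS class of a single-container pod.
func qosClass(r resources) string {
	if len(r.Requests) == 0 && len(r.Limits) == 0 {
		return "BestEffort"
	}
	for _, name := range []string{"cpu", "memory"} {
		limit, hasLimit := r.Limits[name]
		if !hasLimit {
			return "Burstable"
		}
		// A missing request defaults to the limit, so only an explicit,
		// different request breaks the Guaranteed class.
		if req, ok := r.Requests[name]; ok && req != limit {
			return "Burstable"
		}
	}
	return "Guaranteed"
}

func main() {
	// Values from the kubernetesConfig.resources example.
	r := resources{
		Requests: map[string]string{"cpu": "1", "memory": "8Gi"},
		Limits:   map[string]string{"cpu": "1", "memory": "8Gi"},
	}
	fmt.Println(qosClass(r)) // Guaranteed
}
```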

NodeSelector: nodeSelector is the simplest recommended form of node selection constraint. It is a field of the PodSpec that specifies a map of key-value pairs. For the pod to be eligible to run on a node, the node must have each of these key-value pairs as labels.

```yaml
kubernetesConfig:
  nodeSelector:
    beta.kubernetes.io/os: linux
```
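Here is a minimal sketch of that matching rule. A node qualifies only if every key-value pair in nodeSelector appears among its labels. The node label maps, including the extra zone label, are hypothetical; in a real cluster they come from the Node objects.

```go
package main

import "fmt"

// matchesNodeSelector reports whether a node with the given labels satisfies
// a nodeSelector: every key-value pair in the selector must be on the node.
// An empty selector therefore matches every node.
func matchesNodeSelector(selector, nodeLabels map[string]string) bool {
	for key, want := range selector {
		if got, ok := nodeLabels[key]; !ok || got != want {
			return false
		}
	}
	return true
}

func main() {
	selector := map[string]string{"beta.kubernetes.io/os": "linux"}
	// Hypothetical node labels.
	linuxNode := map[string]string{"beta.kubernetes.io/os": "linux", "zone": "a"}
	windowsNode := map[string]string{"beta.kubernetes.io/os": "windows"}
	fmt.Println(matchesNodeSelector(selector, linuxNode))   // true
	fmt.Println(matchesNodeSelector(selector, windowsNode)) // false
}
```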

Affinity: The affinity/anti-affinity feature greatly expands the types of constraints you can express. Its language is more expressive than nodeSelector and offers matching rules beyond exact matches combined with a logical AND.

```yaml
kubernetesConfig:
  mongoAffinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: beta.kubernetes.io/os
                operator: In
                values:
                  - linux
```
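To make "more expressive" concrete, this sketch evaluates requiredDuringSchedulingIgnoredDuringExecution the way Kubernetes documents it. The nodeSelectorTerms are ORed, the matchExpressions inside one term are ANDed, and an empty term matches nothing. It covers the In, NotIn, Exists, and DoesNotExist operators and deliberately omits Gt/Lt and matchFields. The type and function names are illustrative and do not come from the operator.

```go
package main

import "fmt"

// requirement mirrors one entry of matchExpressions.
type requirement struct {
	Key      string
	Operator string // In, NotIn, Exists, DoesNotExist
	Values   []string
}

func contains(values []string, v string) bool {
	for _, x := range values {
		if x == v {
			return true
		}
	}
	return false
}

// matchesRequirement evaluates a single expression against node labels.
func matchesRequirement(r requirement, labels map[string]string) bool {
	v, ok := labels[r.Key]
	switch r.Operator {
	case "In":
		return ok && contains(r.Values, v)
	case "NotIn":
		return !ok || !contains(r.Values, v)
	case "Exists":
		return ok
	case "DoesNotExist":
		return !ok
	default:
		return false // Gt, Lt, and unknown operators are not handled here
	}
}

// matchesNodeSelectorTerms ORs the terms and ANDs the expressions in a term.
func matchesNodeSelectorTerms(terms [][]requirement, labels map[string]string) bool {
	for _, term := range terms {
		if len(term) == 0 {
			continue // an empty term matches no nodes
		}
		all := true
		for _, r := range term {
			if !matchesRequirement(r, labels) {
				all = false
				break
			}
		}
		if all {
			return true
		}
	}
	return false
}

func main() {
	// The term from the example: beta.kubernetes.io/os In [linux].
	terms := [][]requirement{{{Key: "beta.kubernetes.io/os", Operator: "In", Values: []string{"linux"}}}}
	fmt.Println(matchesNodeSelectorTerms(terms, map[string]string{"beta.kubernetes.io/os": "linux"}))   // true
	fmt.Println(matchesNodeSelectorTerms(terms, map[string]string{"beta.kubernetes.io/os": "windows"})) // false
}
```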

PriorityClassName: A PriorityClass is a non-namespaced object that defines a mapping from a priority class name to the integer value of the priority. The name is specified in the name field of the PriorityClass object's metadata, and the value is specified in the required value field.

```yaml
kubernetesConfig:
  priorityClassName: system-node-critical
```
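Priority only matters relative to other pods. The scheduler queues pending pods highest priority first and, when resources are short, may preempt lower-priority pods. The sketch below models only the queue ordering. It uses the integer values of Kubernetes' two built-in classes; the class high-priority and all pod names are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// Values of the built-in classes; "high-priority" is a hypothetical
// user-defined class. A real cluster looks these up from PriorityClass objects.
var priorityValues = map[string]int32{
	"system-node-critical":    2000001000,
	"system-cluster-critical": 2000000000,
	"high-priority":           1000000,
}

type pendingPod struct {
	Name              string
	PriorityClassName string
}

// priorityOf maps an empty class name to 0, the default when no
// globalDefault PriorityClass exists. (Kubernetes rejects pods that name a
// class that does not exist, so that case is not modeled.)
func priorityOf(p pendingPod) int32 {
	return priorityValues[p.PriorityClassName]
}

// scheduleOrder sorts pending pods highest priority first, keeping the input
// order for equal priorities.
func scheduleOrder(pods []pendingPod) []string {
	sorted := append([]pendingPod(nil), pods...)
	sort.SliceStable(sorted, func(i, j int) bool {
		return priorityOf(sorted[i]) > priorityOf(sorted[j])
	})
	names := make([]string, len(sorted))
	for i, p := range sorted {
		names[i] = p.Name
	}
	return names
}

func main() {
	pods := []pendingPod{
		{Name: "batch-job"},
		{Name: "mongodb-cluster-0", PriorityClassName: "system-node-critical"},
		{Name: "reporting-app", PriorityClassName: "high-priority"},
	}
	fmt.Println(scheduleOrder(pods)) // [mongodb-cluster-0 reporting-app batch-job]
}
```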

Tolerations: Tolerations are applied to pods and allow (but do not require) the pods to schedule onto nodes with matching taints.

```yaml
kubernetesConfig:
  tolerations:
    - key: "example-key"
      operator: "Exists"
      effect: "NoSchedule"
```
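This sketch mirrors Kubernetes' toleration-matching rules. It shows why the example toleration, which uses operator Exists and no value, admits the pod to any node tainted with example-key:NoSchedule, whatever the taint's value is. The type names are illustrative. PreferNoSchedule taints are treated as soft, as the scheduler does.

```go
package main

import "fmt"

type taint struct {
	Key, Value, Effect string
}

type toleration struct {
	Key, Operator, Value, Effect string
}

// tolerates applies the matching rules: an empty effect matches every effect,
// an empty key (used with Exists) matches every key, Exists ignores the value,
// and Equal (the default operator) requires the values to match.
func tolerates(t toleration, tt taint) bool {
	if t.Effect != "" && t.Effect != tt.Effect {
		return false
	}
	if t.Key != "" && t.Key != tt.Key {
		return false
	}
	switch t.Operator {
	case "", "Equal":
		return t.Value == tt.Value
	case "Exists":
		return true
	default:
		return false
	}
}

// schedulable reports whether every hard taint (NoSchedule or NoExecute) on a
// node is tolerated; PreferNoSchedule taints are only soft preferences.
func schedulable(tolerations []toleration, taints []taint) bool {
	for _, tt := range taints {
		if tt.Effect == "PreferNoSchedule" {
			continue
		}
		ok := false
		for _, t := range tolerations {
			if tolerates(t, tt) {
				ok = true
				break
			}
		}
		if !ok {
			return false
		}
	}
	return true
}

func main() {
	// The toleration from the kubernetesConfig example.
	tols := []toleration{{Key: "example-key", Operator: "Exists", Effect: "NoSchedule"}}
	fmt.Println(schedulable(tols, []taint{{Key: "example-key", Value: "any", Effect: "NoSchedule"}})) // true
	fmt.Println(schedulable(tols, []taint{{Key: "other-key", Effect: "NoSchedule"}}))               // false
}
```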

SecurityContext: A security context defines privilege and access control settings for a Pod or Container. The security settings that you specify for a Pod apply to all Containers in the Pod.

```yaml
securityContext:
  fsGroup: 1001
```

storage

storage is the storage-specific configuration of the MongoDB CRD. This parameter enables persistence for the MongoDB statefulset. It takes inputs such as accessModes, the size of the storage, and storageClass.

```yaml
storage:
  accessModes: ["ReadWriteOnce"]
  storageSize: 1Gi
  storageClass: csi-cephfs-sc
```
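Kubernetes quantities distinguish binary suffixes from decimal ones. For example, storageSize: 1Gi requests 1,073,741,824 bytes, while 1G would request 1,000,000,000. The small parser below illustrates the difference. It is a sketch that accepts only whole numbers with a handful of suffixes, and it does not replace Kubernetes' resource.Quantity type.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Binary (Ki, Mi, Gi, Ti) and decimal (k, M, G, T) suffixes.
var suffixes = []struct {
	suffix string
	factor int64
}{
	{"Ki", 1 << 10}, {"Mi", 1 << 20}, {"Gi", 1 << 30}, {"Ti", 1 << 40},
	{"k", 1e3}, {"M", 1e6}, {"G", 1e9}, {"T", 1e12},
}

// parseStorageSize converts a whole-number quantity such as "1Gi" to bytes.
// Fractions ("1.5Gi"), exponents, milli units and overflow are not handled.
func parseStorageSize(q string) (int64, error) {
	for _, s := range suffixes {
		if strings.HasSuffix(q, s.suffix) {
			n, err := strconv.ParseInt(strings.TrimSuffix(q, s.suffix), 10, 64)
			if err != nil {
				return 0, err
			}
			return n * s.factor, nil
		}
	}
	return strconv.ParseInt(q, 10, 64) // no suffix: plain bytes
}

func main() {
	for _, q := range []string{"1Gi", "1G", "512Mi"} {
		n, err := parseStorageSize(q)
		if err != nil {
			fmt.Println(q, "error:", err)
			continue
		}
		fmt.Printf("%s = %d bytes\n", q, n)
	}
}
```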

mongoDBSecurity

mongoDBSecurity is the security specification of the MongoDB CRD. Enable it to make the MongoDB database require authentication. It takes parameters such as the admin username and a reference to the Kubernetes secret that holds the password.

```yaml
mongoDBSecurity:
  mongoDBAdminUser: admin
  secretRef:
    name: mongodb-secret
    key: password
```
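Once authentication is enabled, clients have to present the admin user and the password stored under the password key of mongodb-secret. The sketch below builds that connection string in Go. It assumes the password reaches the client through a hypothetical MONGODB_ADMIN_PASSWORD environment variable and uses a hypothetical host name. Neither is created by the operator. url.UserPassword percent-encodes characters such as @ that would otherwise break the URI.

```go
package main

import (
	"fmt"
	"net/url"
	"os"
)

// buildMongoURI assembles a mongodb:// connection string for the admin user.
// With no database path, MongoDB authenticates against the admin database.
func buildMongoURI(user, password, host string) string {
	u := url.URL{
		Scheme: "mongodb",
		User:   url.UserPassword(user, password), // percent-encodes @, :, / and ?
		Host:   host,
	}
	return u.String()
}

func main() {
	// Hypothetical variable holding the value of mongodb-secret/password.
	password := os.Getenv("MONGODB_ADMIN_PASSWORD")
	// Hypothetical in-cluster host name; the real Service name may differ.
	fmt.Println(buildMongoURI("admin", password, "mongodb-cluster-0.mongodb-cluster:27017"))
}
```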

mongoDBMonitoring

mongoDBMonitoring is the monitoring feature of the MongoDB CRD. This parameter enables MongoDB monitoring through MongoDB Exporter and takes the image, imagePullPolicy, and resources for the exporter.

```yaml
mongoDBMonitoring:
  enableExporter: true
  image: bitnami/mongodb-exporter:0.11.2-debian-10-r382
  imagePullPolicy: IfNotPresent
  resources: {}
```
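The CRD takes the exporter image as a single name:tag string, whereas the Helm chart splits it into mongoDBMonitoring.image.name and mongoDBMonitoring.image.tag. This sketch shows how the two forms map onto each other. It treats a colon as the tag separator only when it comes after the last slash, so registry ports survive. It does not handle @sha256 digests.

```go
package main

import (
	"fmt"
	"strings"
)

// splitImage separates an image reference into name and tag. A colon before
// the last slash belongs to a registry port (e.g. "registry:5000/repo").
// Digest references ("repo@sha256:...") are not supported by this sketch.
func splitImage(ref string) (name, tag string) {
	slash := strings.LastIndex(ref, "/")
	colon := strings.LastIndex(ref, ":")
	if colon > slash {
		return ref[:colon], ref[colon+1:]
	}
	return ref, "latest" // Docker's default when no tag is given
}

// joinImage rebuilds the single-string form used by the CRD.
func joinImage(name, tag string) string {
	return name + ":" + tag
}

func main() {
	name, tag := splitImage("bitnami/mongodb-exporter:0.11.2-debian-10-r382")
	fmt.Println(name) // bitnami/mongodb-exporter
	fmt.Println(tag)  // 0.11.2-debian-10-r382
	fmt.Println(joinImage(name, tag) == "bitnami/mongodb-exporter:0.11.2-debian-10-r382") // true
}
```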

4 - Monitoring

4.1 - Prometheus Monitoring

The MongoDB Operator uses mongodb-exporter to collect statistics and metrics for the MongoDB database. The exporter can capture metrics in both the standalone and the cluster mode of MongoDB.

MongoDB Monitoring

If the Helm chart is used for installation, monitoring can be enabled directly in values.yaml:

```yaml
mongoDBMonitoring:
  enabled: true
  image:
    name: bitnami/mongodb-exporter
    tag: 0.11.2-debian-10-r382
    imagePullPolicy: IfNotPresent
  resources: {}
```

For a kubectl-based installation, add a snippet like this to the YAML manifest:

```yaml
mongoDBMonitoring:
  enableExporter: true
  image: bitnami/mongodb-exporter:0.11.2-debian-10-r382
  imagePullPolicy: IfNotPresent
  resources: {}
```

ServiceMonitor for Prometheus Operator

Once the exporter is configured, the next task is to have Prometheus scrape it. With the Prometheus Operator, this requires a CRD object in Kubernetes called ServiceMonitor, which can also be managed through Helm:

```yaml
serviceMonitor:
  enabled: false
  interval: 30s
  scrapeTimeout: 10s
  namespace: monitoring
```

For a kubectl-based setup, create the ServiceMonitor definition in a YAML file and apply it with kubectl. A ServiceMonitor definition looks like this:

```yaml
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: mongodb-prometheus-monitoring
  labels:
    app.kubernetes.io/name: mongodb
    app.kubernetes.io/managed-by: mongodb
    app.kubernetes.io/instance: mongodb
    app.kubernetes.io/version: v0.1.0
    app.kubernetes.io/component: middleware
spec:
  selector:
    matchLabels:
      app: mongodb
      mongodb_setup: standalone
      role: standalone
  endpoints:
    - port: metrics
      interval: 30s
      scrapeTimeout: 30s
  namespaceSelector:
    matchNames:
      - middleware-production
```
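Both the Helm values and the ServiceMonitor manifest set interval and scrapeTimeout. Prometheus rejects a scrape configuration whose scrape timeout is greater than its scrape interval, although equal values such as 30s/30s are accepted. A quick pre-flight check like the sketch below can catch this before applying. It relies on Go's time.ParseDuration, which understands units such as s, m and h but not Prometheus-only units such as d or w.

```go
package main

import (
	"fmt"
	"time"
)

// validateScrapeConfig checks the serviceMonitor timing values: both must
// parse, and the timeout must not exceed the interval.
func validateScrapeConfig(interval, scrapeTimeout string) error {
	i, err := time.ParseDuration(interval)
	if err != nil {
		return fmt.Errorf("invalid interval %q: %w", interval, err)
	}
	t, err := time.ParseDuration(scrapeTimeout)
	if err != nil {
		return fmt.Errorf("invalid scrapeTimeout %q: %w", scrapeTimeout, err)
	}
	if t > i {
		return fmt.Errorf("scrapeTimeout %s exceeds interval %s", t, i)
	}
	return nil
}

func main() {
	fmt.Println(validateScrapeConfig("30s", "10s")) // <nil>
	fmt.Println(validateScrapeConfig("10s", "30s")) // scrapeTimeout 30s exceeds interval 10s
}
```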

MongoDB Alerting

Since the MongoDB exporter captures the metrics, the alerts are built from queries over the metrics it exposes. The alerts are available inside the alerting directory.

Similar to ServiceMonitor, the Prometheus Operator provides another CRD object, PrometheusRule, through which Prometheus rules can be created inside the Kubernetes cluster.

Example:

```yaml
---
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  labels:
    app: prometheus-mongodb-rules
  name: prometheus-mongodb-rules
spec:
  groups:
    - name: mongodb
      rules:
        - alert: MongodbDown
          expr: mongodb_up == 0
          for: 0m
          labels:
            severity: critical
          annotations:
            summary: MongoDB Down (instance {{ $labels.instance }})
            description: "MongoDB instance is down\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
```

Alert descriptions:

| AlertName | Description |
|---|---|
| MongoDB Down | MongoDB instance is down |
| MongoDB replication lag | MongoDB replication lag is more than 10s |
| MongoDB replication Status 3 | A replica set member is either performing startup self-checks or transitioning from completing a rollback or resync |
| MongoDB replication Status 6 | The state of a replica set member, as seen from another member of the set, is not yet known |
| MongoDB number cursors open | Too many cursors opened by MongoDB for clients (> 10k) |
| MongoDB cursors timeouts | Too many cursors are timing out |
| MongoDB too many connections | Too many connections (> 80%) |
| MongoDB virtual memory usage | High memory usage on MongoDB |
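Two of the thresholds in the table are easy to express directly. The sketch assumes connection usage is computed from the current and available counters of MongoDB's serverStatus connections document. It also assumes replication lag is the difference, in seconds, between the primary's and a secondary's last applied optime. The rules shipped in the alerting directory may compute these differently, and the sample numbers are made up.

```go
package main

import "fmt"

// connectionUsage returns the fraction of connections in use, given the
// serverStatus connections.current and connections.available counters.
func connectionUsage(current, available int) float64 {
	total := current + available
	if total == 0 {
		return 0
	}
	return float64(current) / float64(total)
}

// replicationLagSeconds is how far a secondary's last applied optime trails
// the primary's, both given as Unix seconds (a simplification).
func replicationLagSeconds(primaryOptime, secondaryOptime int64) int64 {
	return primaryOptime - secondaryOptime
}

func main() {
	usage := connectionUsage(850, 150) // hypothetical sample values
	fmt.Printf("connection usage %.0f%%, alert=%v\n", usage*100, usage > 0.8)

	lag := replicationLagSeconds(1700000030, 1700000015) // hypothetical optimes
	fmt.Printf("replication lag %ds, alert=%v\n", lag, lag > 10)
}
```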

4.2 - Grafana Dashboard

The MongoDB Grafana dashboard can be found inside the monitoring directory.

The available dashboards are:

Screenshots

5 - Development

5.1 - Development Guide

Pre-requisites

Access to Kubernetes cluster

First, you will need access to a Kubernetes cluster. The easiest way to start is minikube.

Tools to build an Operator

Apart from a Kubernetes cluster, some tools are needed to build and test the MongoDB Operator.

Building Operator

To build the operator on a local system, use the make command:

```shell
$ make manager
...
go build -o bin/manager main.go
```

The MongoDB operator is packaged as a container image to run on the Kubernetes cluster:

```shell
$ make docker-build
```

To try it out on Kubernetes, you can use minikube:

```shell
$ minikube start --vm-driver virtualbox
...
😄 minikube v1.0.1 on linux (amd64)
🤹 Downloading Kubernetes v1.14.1 images in the background ...
🔥 Creating kvm2 VM (CPUs=2, Memory=2048MB, Disk=20000MB) ...
📶 "minikube" IP address is 192.168.39.240
🐳 Configuring Docker as the container runtime ...
🐳 Version of container runtime is 18.06.3-ce
⌛ Waiting for image downloads to complete ...
✨ Preparing Kubernetes environment ...
🚜 Pulling images required by Kubernetes v1.14.1 ...
🚀 Launching Kubernetes v1.14.1 using kubeadm ...
⌛ Waiting for pods: apiserver proxy etcd scheduler controller dns
🔑 Configuring cluster permissions ...
🤔 Verifying component health .....
💗 kubectl is now configured to use "minikube"
🏄 Done! Thank you for using minikube!
```

```shell
$ make test
```

5.2 - Continuous Integration Pipeline

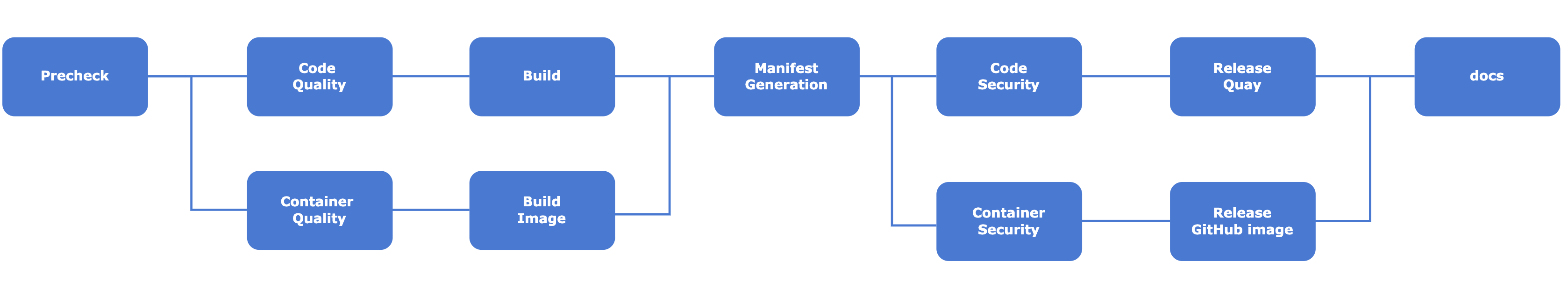

The MongoDB Operator uses an Azure DevOps pipeline for continuous integration. The pipeline runs all the important checks for each pull request and can also publish releases to Quay, Docker Hub, and GitHub.

The pipeline definition can be edited inside .azure-pipelines.

Tools used in the CI process:

- Golang: https://go.dev/
- Golang CI Lint: https://github.com/golangci/golangci-lint
- Hadolint: https://github.com/hadolint/hadolint
- GoSec: https://github.com/securego/gosec
- Trivy: https://github.com/aquasecurity/trivy

6 - Release History

6.1 - CHANGELOG

v0.2.0

February 16, 2022

🏄 Features

- Added ImagePullSecret support

- Added affinity and nodeSelector capability

- Added toleration and priorityClass support

- Updated documentation with CI process information

🪲 Bug Fixes

- Fixed patching of Kubernetes resources

v0.1.0

February 5, 2022

🏄 Features

- Added standalone MongoDB functionality

- Added cluster MongoDB functionality

- Helm chart installation support

- Monitoring support for MongoDB

- Documentation for setup and management